DEB installation

Install Elasticsearch

```shell
# Import the Elastic signing key and add the 8.x APT repository
wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo gpg --dearmor -o /usr/share/keyrings/elasticsearch-keyring.gpg
sudo apt-get install apt-transport-https
echo "deb [signed-by=/usr/share/keyrings/elasticsearch-keyring.gpg] https://artifacts.elastic.co/packages/8.x/apt stable main" | sudo tee /etc/apt/sources.list.d/elastic-8.x.list

# Option 1: install the latest version
sudo apt-get update && sudo apt-get install elasticsearch

# Option 2: install a specific version (the checksum is verified before installing)
wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-8.3.3-amd64.deb
wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-8.3.3-amd64.deb.sha512
shasum -a 512 -c elasticsearch-8.3.3-amd64.deb.sha512
sudo dpkg -i elasticsearch-8.3.3-amd64.deb
```
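`shasum -a 512 -c` reads the `.sha512` file (a hex digest followed by the file name) and recomputes the digest of the named file. Where `shasum` is not installed, the same check can be scripted. A minimal Python sketch, assuming the `<hex digest>  <file name>` layout used by Elastic's `.sha512` files; `verify_sha512` is a hypothetical helper, not part of any Elastic tooling:

```python
import hashlib
from pathlib import Path


def verify_sha512(checksum_file: str) -> bool:
    """Re-check a file against a shasum-style '<hex>  <name>' line, like `shasum -a 512 -c`."""
    checksum_path = Path(checksum_file)
    expected, name = checksum_path.read_text().split(maxsplit=1)
    # The named file sits next to the checksum file; shasum marks binary mode with a leading '*'
    target = checksum_path.parent / name.strip().lstrip("*")
    digest = hashlib.sha512()
    with open(target, "rb") as fh:
        # Hash in 1 MiB chunks so a large .deb never has to fit in memory
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected.lower()
```

Usage: `verify_sha512("elasticsearch-8.3.3-amd64.deb.sha512")` returns `True` only when the downloaded package matches the published digest.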

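Configure system settings

Open the system configuration file:

```shell
vim /etc/sysctl.conf
```

Add the following line, then save and quit (`:wq`):

```
vm.max_map_count=262144
```

Apply the value to the running kernel:

```shell
sysctl -w vm.max_map_count=262144
```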
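To confirm the setting is live, read the value the kernel exposes under `/proc/sys` (the same value `sysctl vm.max_map_count` prints). A small Python sketch; `max_map_count_ok` is a hypothetical helper, and the path is a parameter so it can be pointed at a test file:

```python
from pathlib import Path

REQUIRED_MAX_MAP_COUNT = 262144  # the value written to /etc/sysctl.conf above


def max_map_count_ok(proc_path: str = "/proc/sys/vm/max_map_count",
                     minimum: int = REQUIRED_MAX_MAP_COUNT) -> bool:
    """True if the kernel's current vm.max_map_count is at least `minimum`."""
    current = int(Path(proc_path).read_text().strip())
    return current >= minimum
```

On the Elasticsearch host, `max_map_count_ok()` with no arguments checks the live kernel value.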

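Raise the per-user limits:

```shell
vim /etc/security/limits.conf
```

Add the following lines (keep the leading `*`, which applies them to all users):

```
* soft nofile 65536
* hard nofile 131072
* soft nproc 4096
* hard nproc 4096
* hard memlock unlimited
* soft memlock unlimited
```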
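`limits.conf` is applied by `pam_limits` to login sessions, so it does not affect services started by systemd, which is presumably why the systemd defaults are changed as well. To double-check what was written, a small Python sketch that parses `limits.conf` lines into a lookup table; `parse_limits` is a hypothetical helper and assumes well-formed four-field lines:

```python
def parse_limits(text: str) -> dict:
    """Parse limits.conf lines '<domain> <type> <item> <value>' into {(domain, type, item): value}.

    Blank lines and '#' comments are skipped; 'unlimited'/'infinity' stay strings, numbers become int.
    """
    limits = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments, including trailing ones
        if not line:
            continue
        domain, kind, item, value = line.split()
        limits[(domain, kind, item)] = value if value in ("unlimited", "infinity") else int(value)
    return limits
```

For example, `parse_limits(open("/etc/security/limits.conf").read())[("*", "soft", "nofile")]` should return `65536` after the edit above.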

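Set the systemd defaults as well:

```shell
vim /etc/systemd/system.conf
```

Change the following settings (systemd picks up changes to this file after `systemctl daemon-reexec` or a reboot):

```
DefaultLimitNOFILE=65536
DefaultLimitNPROC=32000
DefaultLimitMEMLOCK=infinity
```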

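Disable swap (this lasts until the next reboot; to make it permanent, also comment out the swap entries in /etc/fstab):

```shell
swapoff -a
```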
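`/proc/swaps` lists the active swap areas below a header line, so after `swapoff -a` only the header should remain. A minimal Python sketch of that check; `active_swap_devices` is a hypothetical helper that takes the file's content as a string:

```python
def active_swap_devices(proc_swaps: str) -> list:
    """Return the swap device/file names listed in /proc/swaps content."""
    lines = proc_swaps.strip().splitlines()
    # The first line is the header: Filename  Type  Size  Used  Priority
    return [line.split()[0] for line in lines[1:] if line.strip()]
```

Usage on the host: `active_swap_devices(open("/proc/swaps").read())` should return an empty list.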

CA certificate location: /etc/elasticsearch/certs/http_ca.crt

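Change the data storage location

```shell
mkdir -p /data/elasticsearch/data
mkdir -p /data/elasticsearch/logs
# Hand the directories to the elasticsearch service user instead of opening them to everyone (777)
chown -R elasticsearch:elasticsearch /data/elasticsearch/data /data/elasticsearch/logs
```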

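Edit the configuration

```shell
vim /etc/elasticsearch/elasticsearch.yml
```

```yaml
cluster.name: es-cluster
node.name: es01            # use es02 on the second node
path.data: /data/elasticsearch/data
path.logs: /data/elasticsearch/logs
network.host: 0.0.0.0
http.port: 9200
discovery.seed_hosts: ["192.168.0.29", "192.168.0.30"]
cluster.initial_master_nodes: ["es01", "es02"]
bootstrap.system_call_filter: false
bootstrap.memory_lock: true
```

The package's security auto-configuration may already have written a `cluster.initial_master_nodes` line at the end of this file; keep only one.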

Register the service with systemd

```shell
sudo systemctl daemon-reload
sudo systemctl enable elasticsearch.service
```

Start the primary node

```shell
sudo systemctl start elasticsearch.service
```

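Replace the certificates

On the secondary node, delete its certificates and keystore; then copy the primary node's certificates and keystore to it. Run the following from /etc/elasticsearch on the primary node:

```shell
cd /etc/elasticsearch
scp -rp certs root@192.168.0.30:/etc/elasticsearch/
scp elasticsearch.keystore root@192.168.0.30:/etc/elasticsearch/
```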

Start the secondary node

```shell
sudo systemctl start elasticsearch.service
```

Test

```shell
curl --cacert /etc/elasticsearch/certs/http_ca.crt -u elastic 'https://localhost:9200/_cat/nodes?v=true&pretty'
```

curl prompts for the elastic user's password.
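The same node check can be scripted, for example from a health-check job. A minimal Python sketch using only the standard library: it fetches `_cat/nodes?v=true` trusting the cluster's own CA (as `--cacert` does) and splits the whitespace-aligned table into one dict per node. Both function names are hypothetical, and the parser assumes no column value contains spaces, which holds for the default columns:

```python
import base64
import ssl
import urllib.request


def fetch_cat_nodes(host: str, user: str, password: str,
                    cafile: str = "/etc/elasticsearch/certs/http_ca.crt") -> str:
    """GET https://<host>:9200/_cat/nodes?v=true with basic auth, trusting the cluster CA."""
    context = ssl.create_default_context(cafile=cafile)
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        f"https://{host}:9200/_cat/nodes?v=true",
        headers={"Authorization": f"Basic {token}"},
    )
    with urllib.request.urlopen(request, context=context, timeout=10) as response:
        return response.read().decode()


def parse_cat_nodes(text: str) -> list:
    """Split the whitespace-aligned table into one dict per node, keyed by the header row."""
    lines = [line for line in text.splitlines() if line.strip()]
    headers = lines[0].split()
    return [dict(zip(headers, line.split())) for line in lines[1:]]
```

Usage: `parse_cat_nodes(fetch_cat_nodes("localhost", "elastic", "<password>"))`; both nodes should be listed, and the elected master carries `*` in the `master` column.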

Installing the package prints the following security auto-configuration summary; keep the generated password:

```text
Authentication and authorization are enabled.
TLS for the transport and HTTP layers is enabled and configured.
The generated password for the elastic built-in superuser is : <generated-password>

If this node should join an existing cluster, you can reconfigure this with
'/usr/share/elasticsearch/bin/elasticsearch-reconfigure-node --enrollment-token <token-here>'
after creating an enrollment token on your existing cluster.

You can complete the following actions at any time:

Reset the password of the elastic built-in superuser with
'/usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic'.

Generate an enrollment token for Kibana instances with
'/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s kibana'.

Generate an enrollment token for Elasticsearch nodes with
'/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s node'.
```

Example node enrollment token:

```text
eyJ2ZXIiOiI4LjYuMiIsImFkciI6WyIxOTIuMTY4LjAuMjk6OTIwMCJdLCJmZ3IiOiJmYzQxYzI5ZjU1NmJiMDZmYWVjODMzMWM0ZjU1ZGY4M2Y2YzA3MTdmYjcyYjk4NDk3ZDU0N2UxZWVjOWM1ZWVlIiwia2V5IjoiNGZGTW9ZWUJwcjhMdzFKY1k1aVo6bkhwa0hxZzdROW1ibmtCM3dMMlVvdyJ9
```
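An enrollment token is base64-encoded JSON. Decoding a token like the one above shows a version (`ver`), the node addresses (`adr`), and two further fields, `fgr` and `key`, which appear to be the CA fingerprint and an API key (my reading of the payload, not a documented format). Decoding is a quick way to check which node a token points at. A minimal Python sketch; `decode_enrollment_token` is a hypothetical helper. Treat tokens as secrets, since they embed a key:

```python
import base64
import json


def decode_enrollment_token(token: str) -> dict:
    """Decode a base64-encoded JSON enrollment token into a dict."""
    token = token.strip()
    padded = token + "=" * (-len(token) % 4)  # tolerate stripped '=' padding
    return json.loads(base64.b64decode(padded))
```

For example, `decode_enrollment_token(token)["adr"]` lists the `host:port` addresses a new node or Kibana will contact.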

Install Kibana

```shell
wget https://artifacts.elastic.co/downloads/kibana/kibana-8.3.3-amd64.deb
# Compare the printed digest with the SHA-512 published for this file
shasum -a 512 kibana-8.3.3-amd64.deb
sudo dpkg -i kibana-8.3.3-amd64.deb
```

Generate the token

```shell
/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s kibana
```

Allow remote access by setting the following in /etc/kibana/kibana.yml:

```yaml
server.host: "192.168.0.29"
```

Start

```shell
sudo /bin/systemctl daemon-reload
sudo /bin/systemctl enable kibana.service
sudo systemctl start kibana.service
```

Open Kibana in a browser (port 5601 by default, e.g. http://192.168.0.29:5601) and enter the token, for example:

```text
eyJ2ZXIiOiI4LjMuMyIsImFkciI6WyIxOTIuMTY4LjAuMjk6OTIwMCJdLCJmZ3IiOiI0NmJlYjlkNDIzODZmNWY1NWU4NTY3NTZjMjk1ZDJkN2NkMzEwNzFlZGMwOTM5YWI3NmQ4M2U2MTJiNDBmOWUxIiwia2V5Ijoid05Cd3BvWUJqaHNTbnNsOHJtX0I6LXY5cjJKVG5TRU84SzRuamY5cnRSUSJ9
```

Get the verification code:

```shell
/usr/share/kibana/bin/kibana-verification-code
```

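Change the elastic password (for example with `elasticsearch-reset-password -u elastic`, listed in the installation output above).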

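Continue editing /etc/kibana/kibana.yml: add the Elasticsearch nodes, the Chinese UI locale, and an encryption key.

Generate the encryption keys (use the value it prints; the key below is only an example):

```shell
/usr/share/kibana/bin/kibana-encryption-keys generate
```

```yaml
elasticsearch.hosts: ['https://192.168.0.29:9200','https://192.168.0.30:9200']
i18n.locale: "zh-CN"
xpack.encryptedSavedObjects.encryptionKey: 'fhjskloppd678ehkdfdlliverpoolfcr'
xpack.actions.preconfiguredAlertHistoryEsIndex: true
```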
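`xpack.encryptedSavedObjects.encryptionKey` must be at least 32 characters long. `kibana-encryption-keys generate` is the supported way to produce it; if that tool is not at hand, any random string of 32 or more characters satisfies the requirement. A Python sketch using the standard `secrets` module (the helper name is hypothetical):

```python
import secrets


def generate_encryption_key(n_bytes: int = 16) -> str:
    """Random hex string; 16 random bytes become 32 hex characters."""
    return secrets.token_hex(n_bytes)
```

Keep the key stable once set: Kibana needs the same key to decrypt saved objects it encrypted earlier.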

Restart

```shell
systemctl restart kibana.service
```

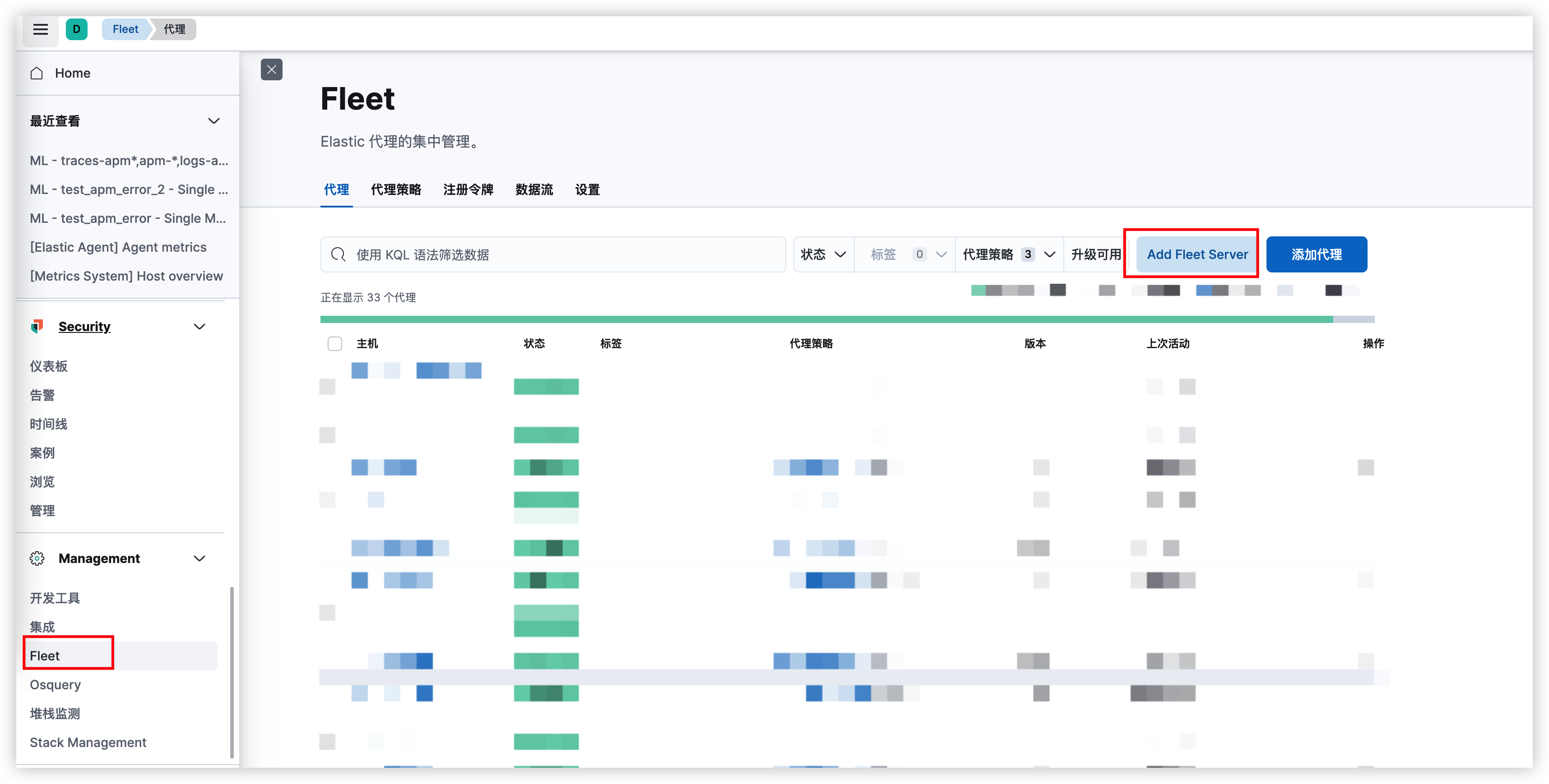

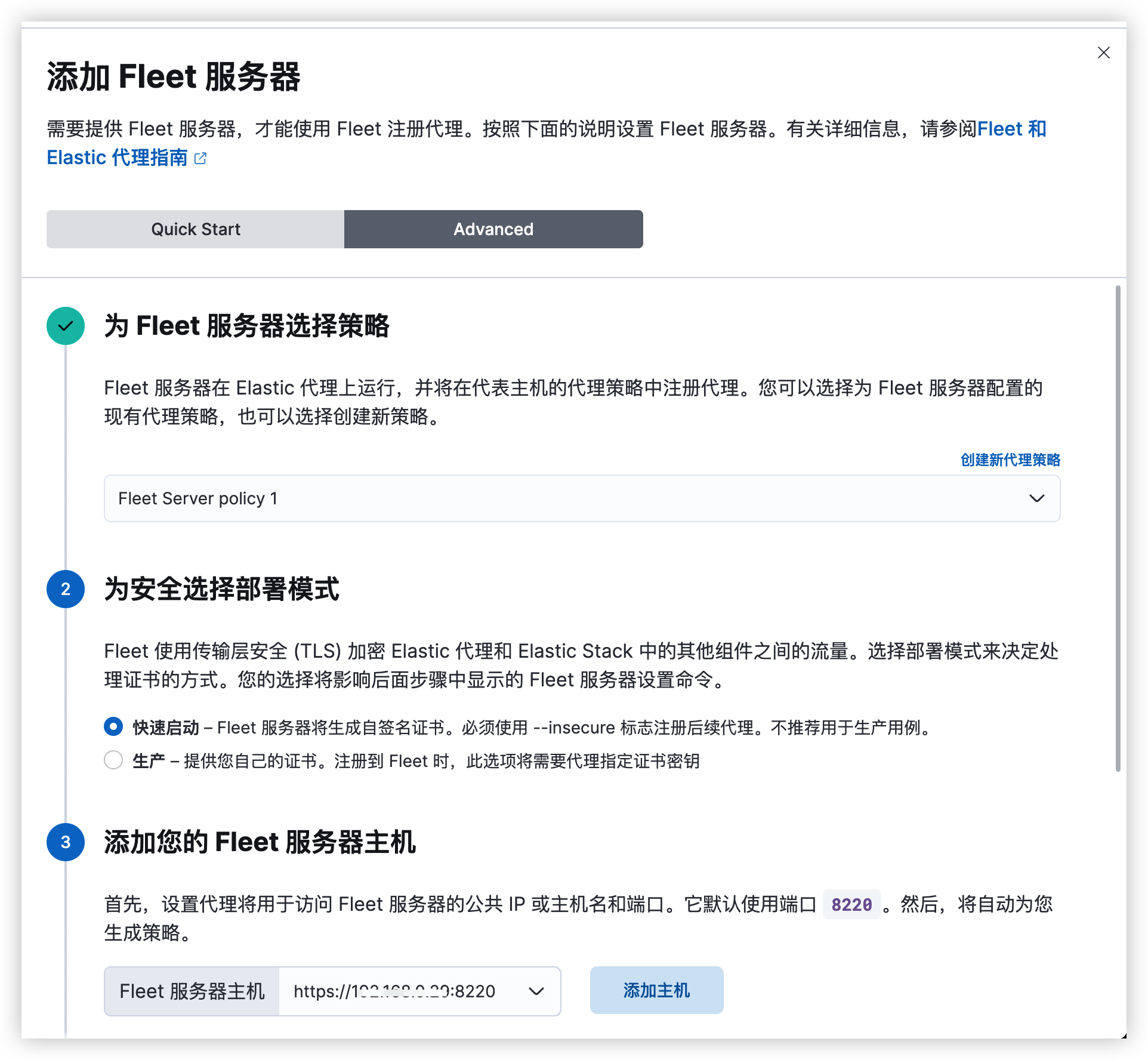

Install Fleet

Simply create a Fleet Server.

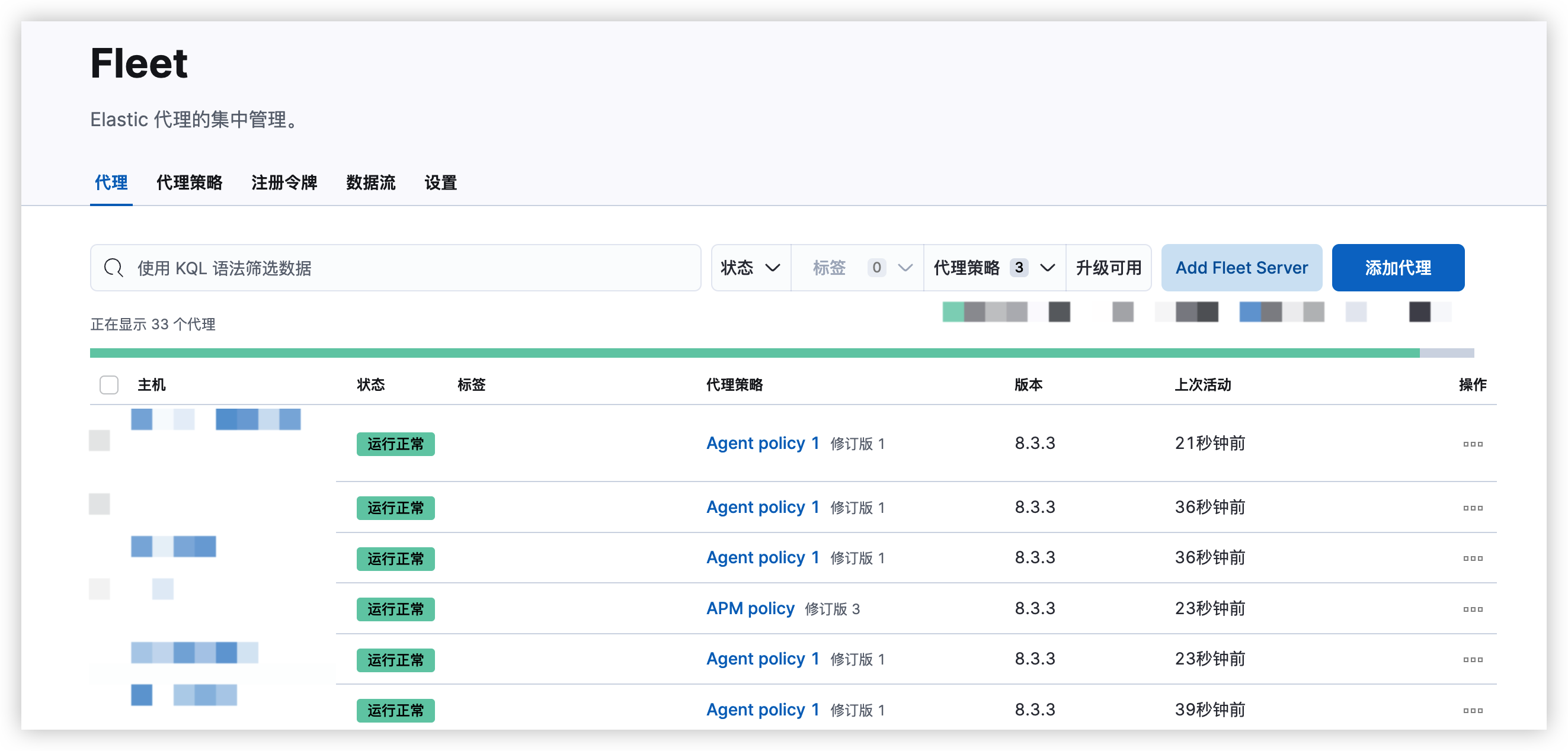

Add an agent

Copy the install command shown in Kibana and append --insecure at the end (it skips verification of the Fleet Server certificate, which is self-signed in this setup):

```shell
curl -L -O https://artifacts.elastic.co/downloads/beats/elastic-agent/elastic-agent-8.3.3-linux-x86_64.tar.gz
tar xzvf elastic-agent-8.3.3-linux-x86_64.tar.gz
cd elastic-agent-8.3.3-linux-x86_64
sudo ./elastic-agent install --url=https://192.168.0.29:8220 --enrollment-token=xxxx --insecure
```

When the command finishes, you can check the result in the UI:

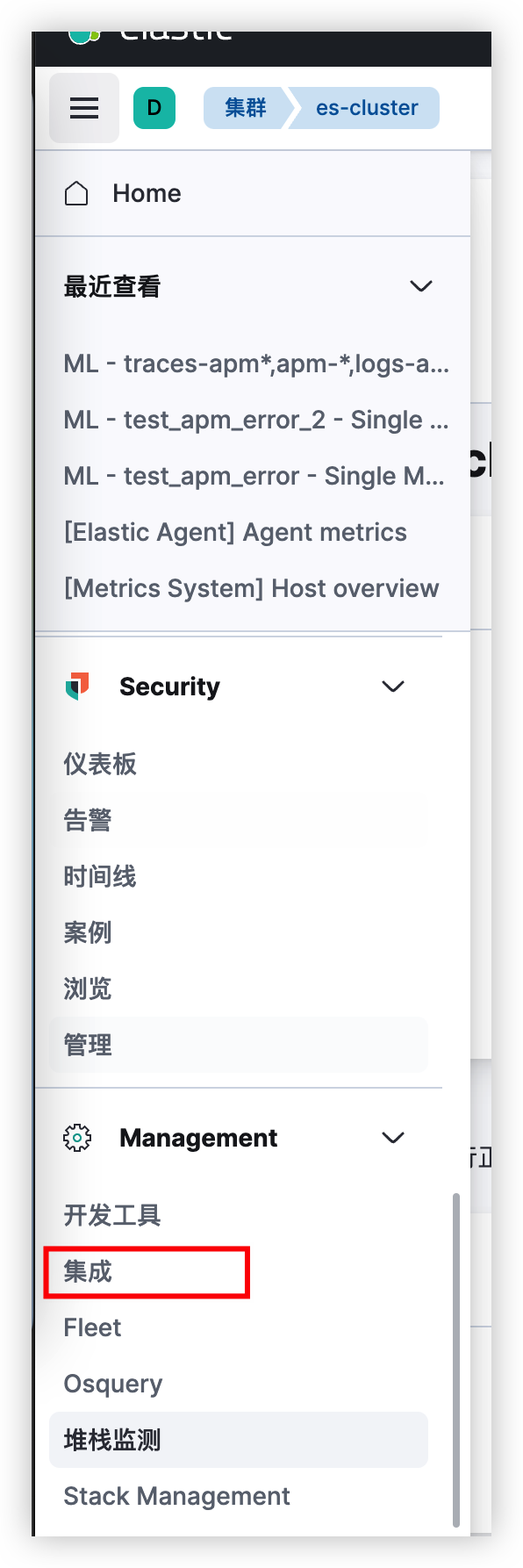

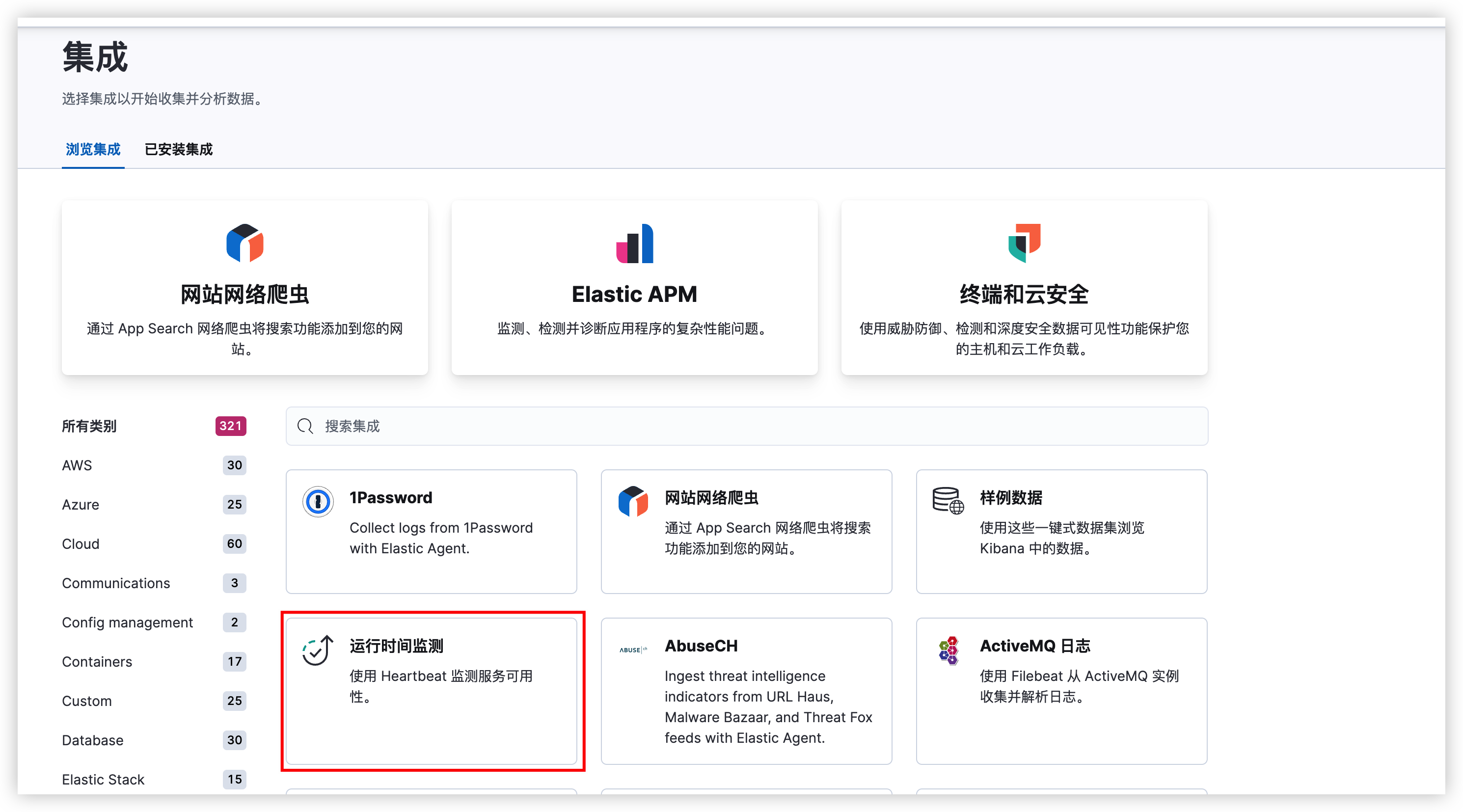

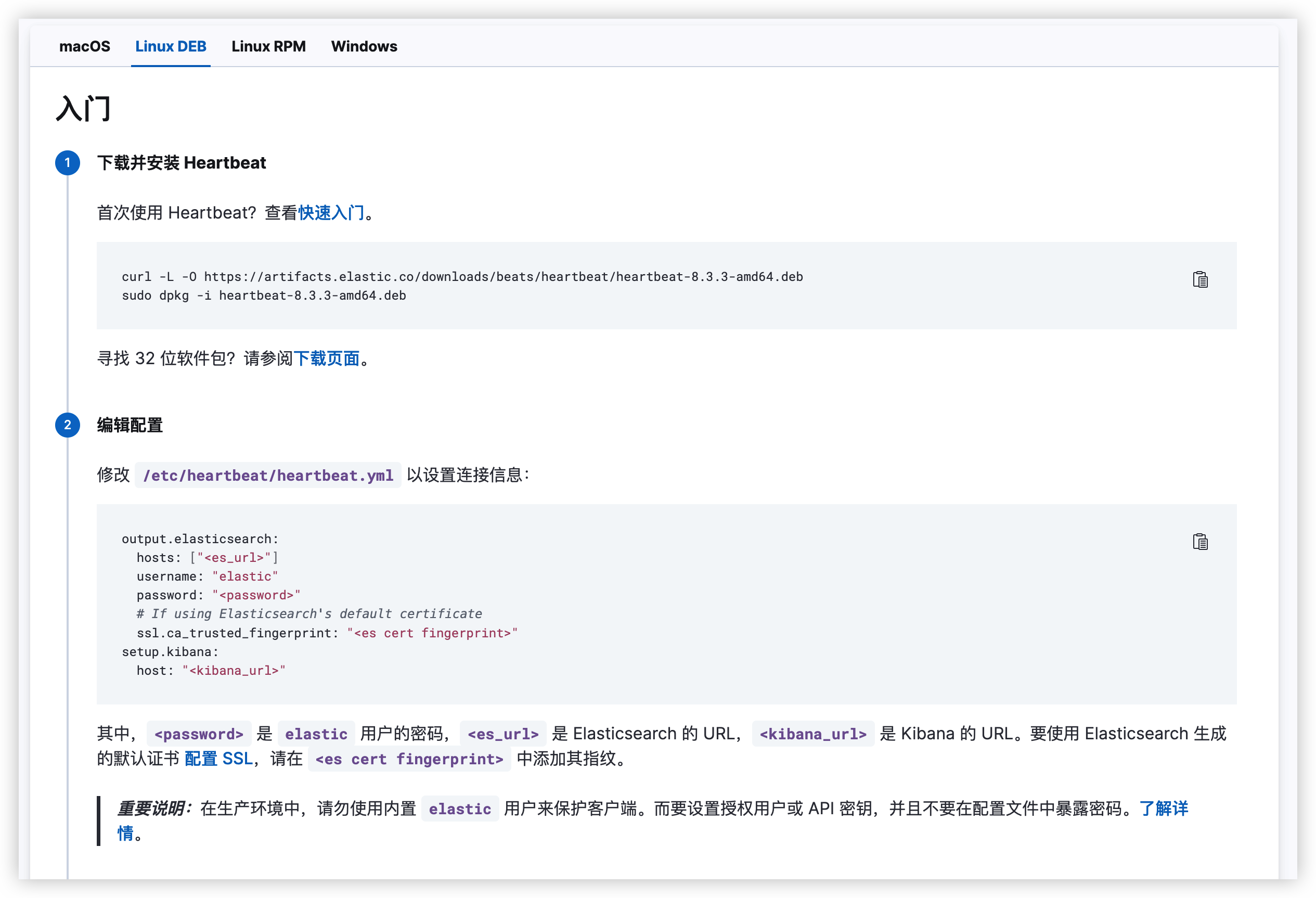

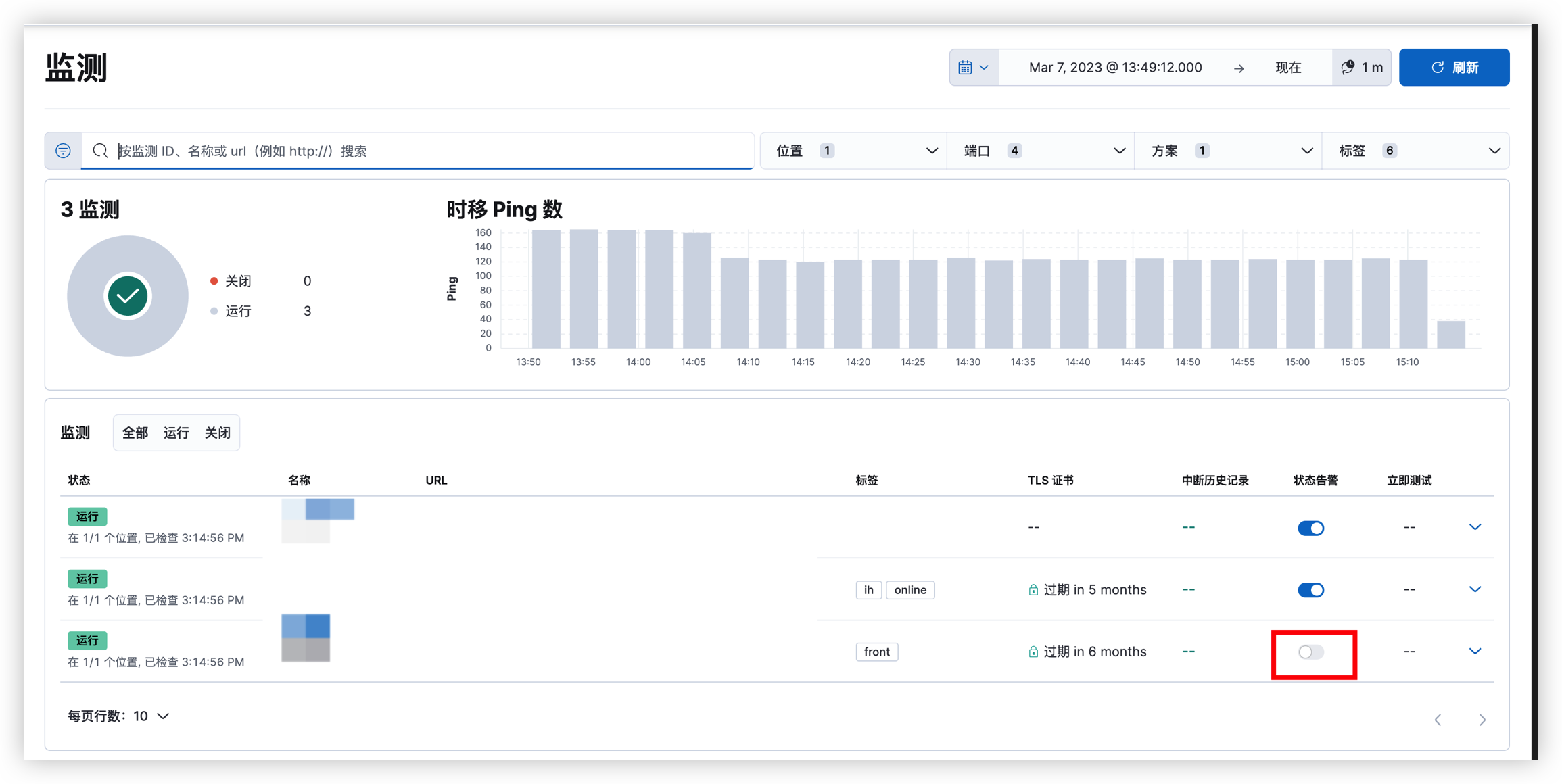

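Set up monitoring

Install Heartbeat by following the commands shown in Kibana.

Create the api_key under Stack Management -> API keys; the CA fingerprint is shown under Fleet -> Settings -> Elasticsearch.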

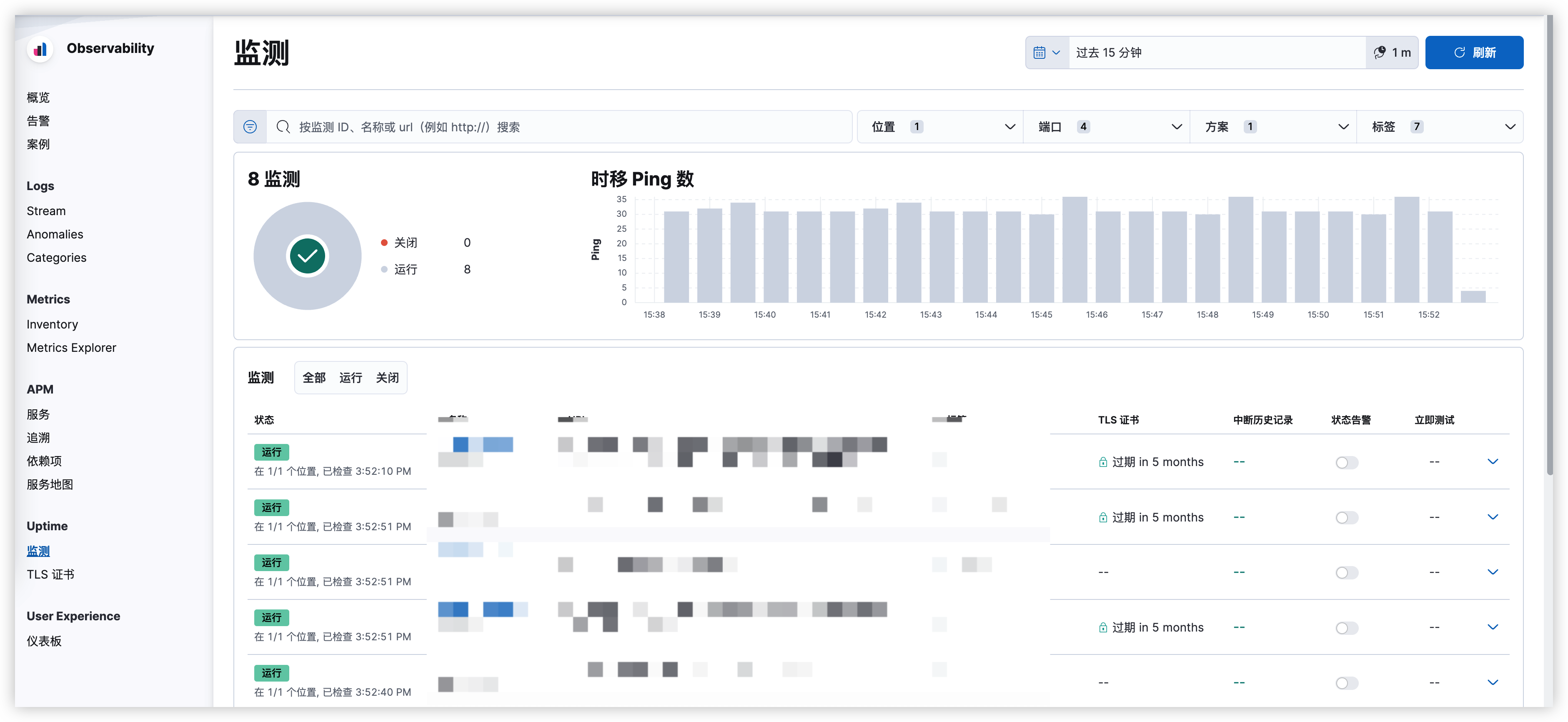
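The fingerprint that Fleet shows (and that `ca_trusted_fingerprint` expects) is the SHA-256 of the CA certificate in hex without colons, the same value as `openssl x509 -fingerprint -sha256` with the colons removed. A minimal Python sketch that computes it from `http_ca.crt`; `ca_fingerprint` is a hypothetical helper and assumes the file holds a single PEM certificate:

```python
import hashlib
import ssl
from pathlib import Path


def ca_fingerprint(pem_path: str) -> str:
    """SHA-256 of the certificate's DER bytes as lowercase hex, no colons."""
    der = ssl.PEM_cert_to_DER_cert(Path(pem_path).read_text())
    return hashlib.sha256(der).hexdigest()
```

Usage: `ca_fingerprint("/etc/elasticsearch/certs/http_ca.crt")`.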

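Configure site monitoring:

Go to the monitors directory:

```shell
cd /etc/heartbeat/monitors.d
```

Copy the sample config file, for example:

```shell
cp sample.http.yml yourfile.yml
```

Edit the config file: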

```yaml
- type: http                       # monitor type (protocol)
  id: your-server                  # monitor ID
  name: Test monitor               # monitor name
  enabled: true
  schedule: '@every 5s'            # send a request every 5 s
  hosts: ["https://baidu.com"]     # URLs to monitor; several are allowed
  ipv4: true
  ipv6: true
  mode: any
  #timeout: 16s                    # timeout, 16s by default
  #username: ''
  #password: ''
  ssl:
    supported_protocols: ["TLSv1.0", "TLSv1.1", "TLSv1.2"]
  check.request:
    method: "GET"
  # Check the response
  check.response:
    # Expected status code: 200 means a 200 response counts as up
    status: 200
    #json:
    #- description: Explanation of what the check does
    #  condition:
    #    equals:
    #      myField: expectedValue
  tags: ["my-server","dev"]        # tags
```
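Each tick of the monitor above amounts to one GET request whose status code is compared with `check.response.status`. A minimal Python sketch of a single such check, handy for testing a URL by hand before adding it; `http_check` is a hypothetical helper. One difference to keep in mind: `urllib` follows redirects, whereas Heartbeat by default does not (see its `max_redirects` option), so a redirecting URL can be up here and down in Heartbeat:

```python
import urllib.error
import urllib.request


def http_check(url: str, expected_status: int = 200, timeout: float = 16.0) -> bool:
    """One GET, like a single monitor tick: up if the status equals expected_status."""
    request = urllib.request.Request(url, method="GET")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == expected_status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise HTTPError; compare the code in case it was the expected one
        return err.code == expected_status
    except OSError:
        # DNS failure, refused connection, TLS error or timeout: the endpoint is down
        return False
```

Usage: `http_check("https://baidu.com")`.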

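To also check the response body, use the `json` settings (commented out above).

Once configured, the monitor can be viewed in Kibana's monitoring (Uptime) view.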

Set up alerts

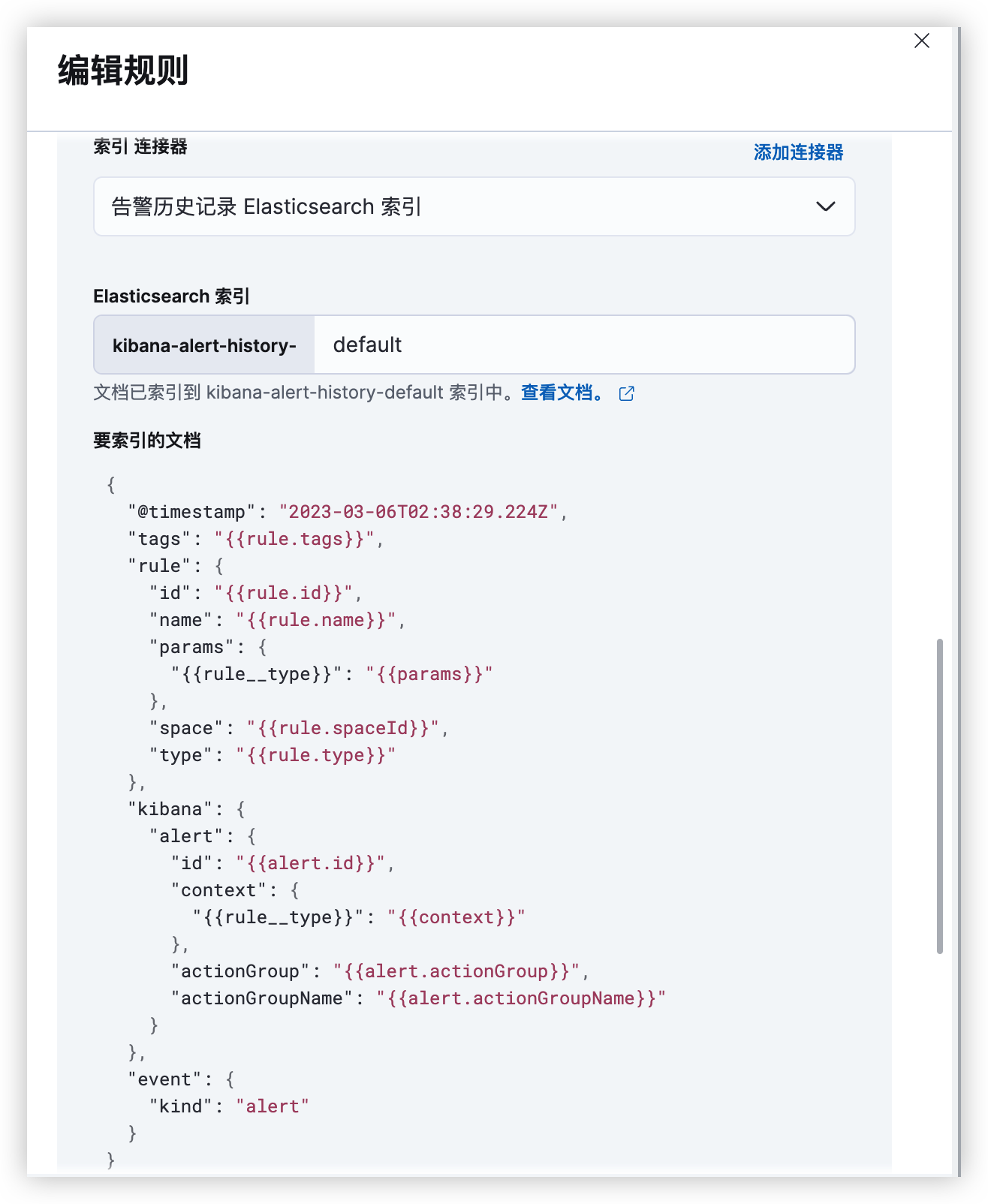

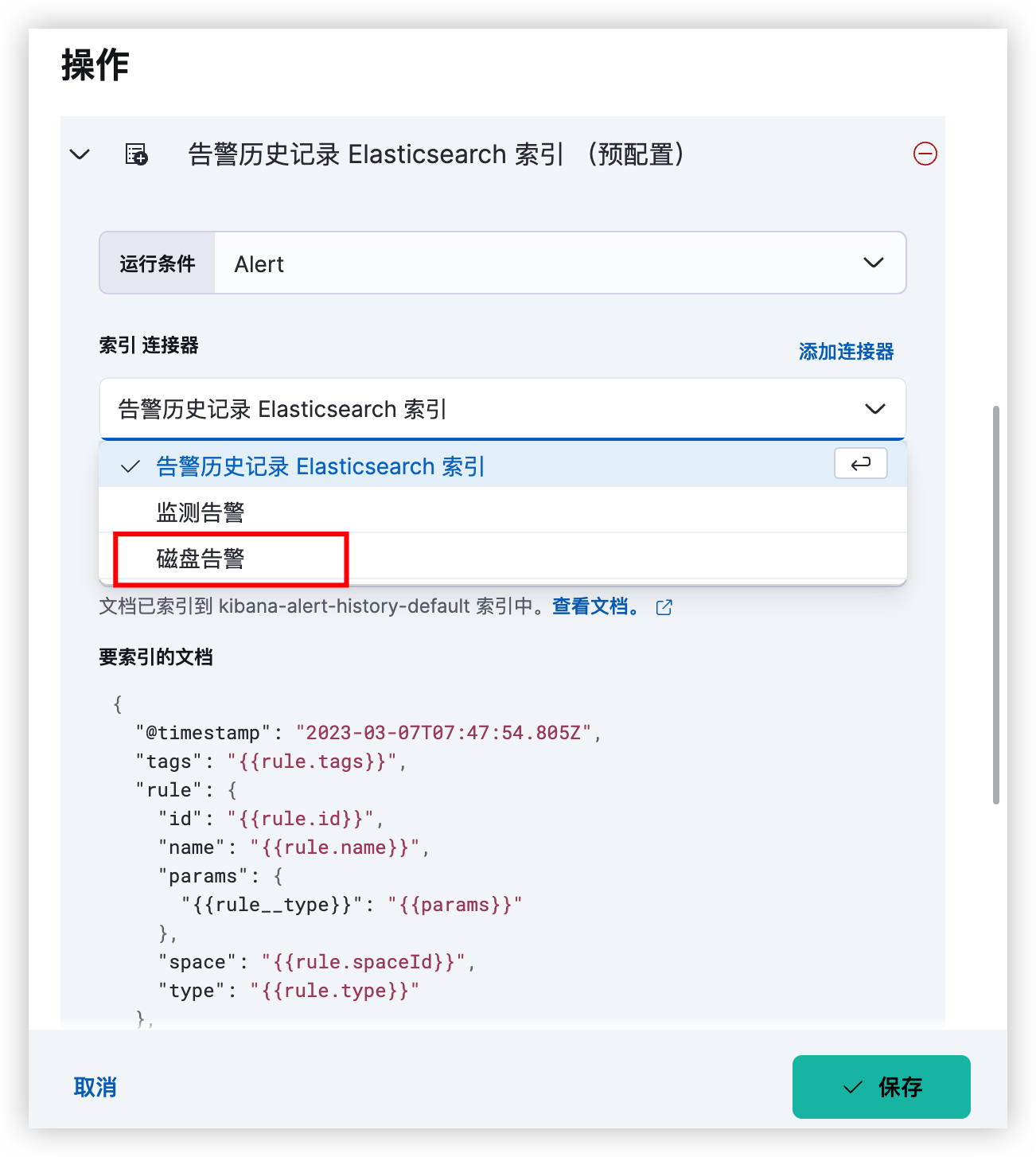

Use the alert-history index template

Add the following to the Kibana configuration file:

```yaml
xpack.actions.preconfiguredAlertHistoryEsIndex: true
```

Restart Kibana.

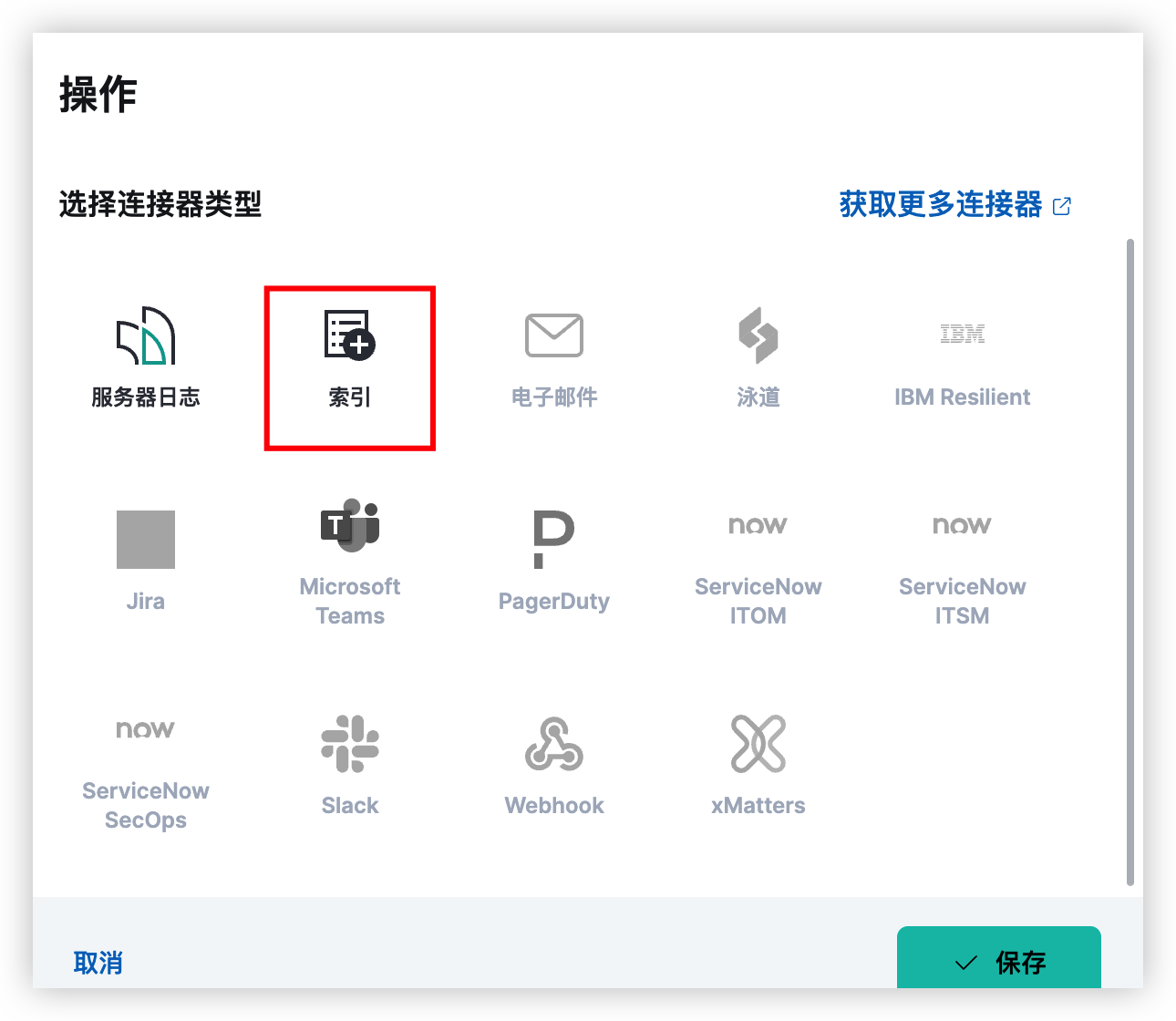

After that, an index connector provided by Elastic is available when you add an alert rule.

Attach the index template to a lifecycle policy

In Dev Tools, create the first index with a write alias:

```
PUT kibana-alert-history-alert-000001
{
  "aliases": {
    "kibana-alert-history-alert": {
      "is_write_index": true
    }
  }
}
```
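Rollover relies on this layout: writes go through the alias, and the trailing `-000001` is the counter that rollover increments for the next index. If the bootstrap should be scripted instead of typed into Dev Tools, a minimal Python sketch that builds (but does not send) the same PUT request; `bootstrap_write_index_request` is a hypothetical helper:

```python
import base64
import json
import urllib.request


def bootstrap_write_index_request(es_url: str, index: str, alias: str,
                                  user: str, password: str) -> urllib.request.Request:
    """Build the PUT that creates `index` with `alias` as its write alias."""
    body = {"aliases": {alias: {"is_write_index": True}}}
    auth = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{es_url}/{index}",
        data=json.dumps(body).encode(),
        method="PUT",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {auth}"},
    )
```

To send it, pass the request to `urllib.request.urlopen` with `context=ssl.create_default_context(cafile="/etc/elasticsearch/certs/http_ca.crt")`.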

Then add this index in Index Lifecycle Management.

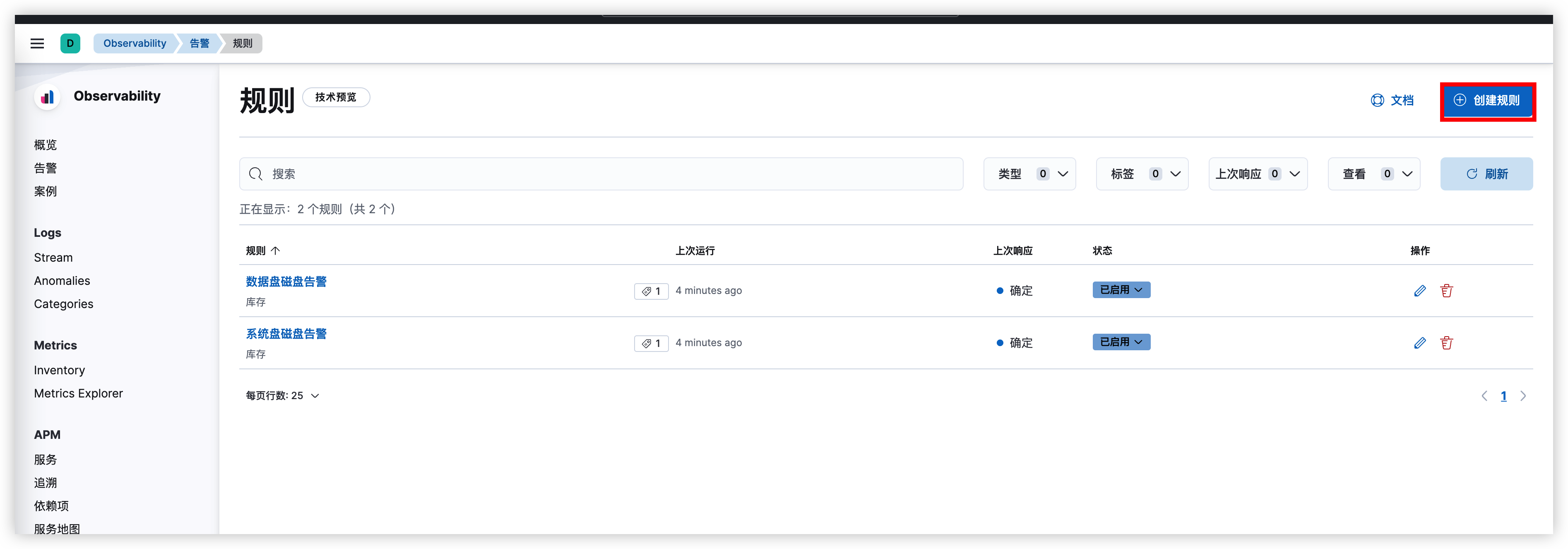

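Create the alert rule

When an alert fires, a document is written to the index.

To pick up new documents in the index incrementally, you can use Logstash; see "Install Logstash" below, where Logstash watches the alert index and pushes alerts to WeCom (企业微信).

The free license supports only two connectors: server log and index.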

Alerts for endpoint monitors

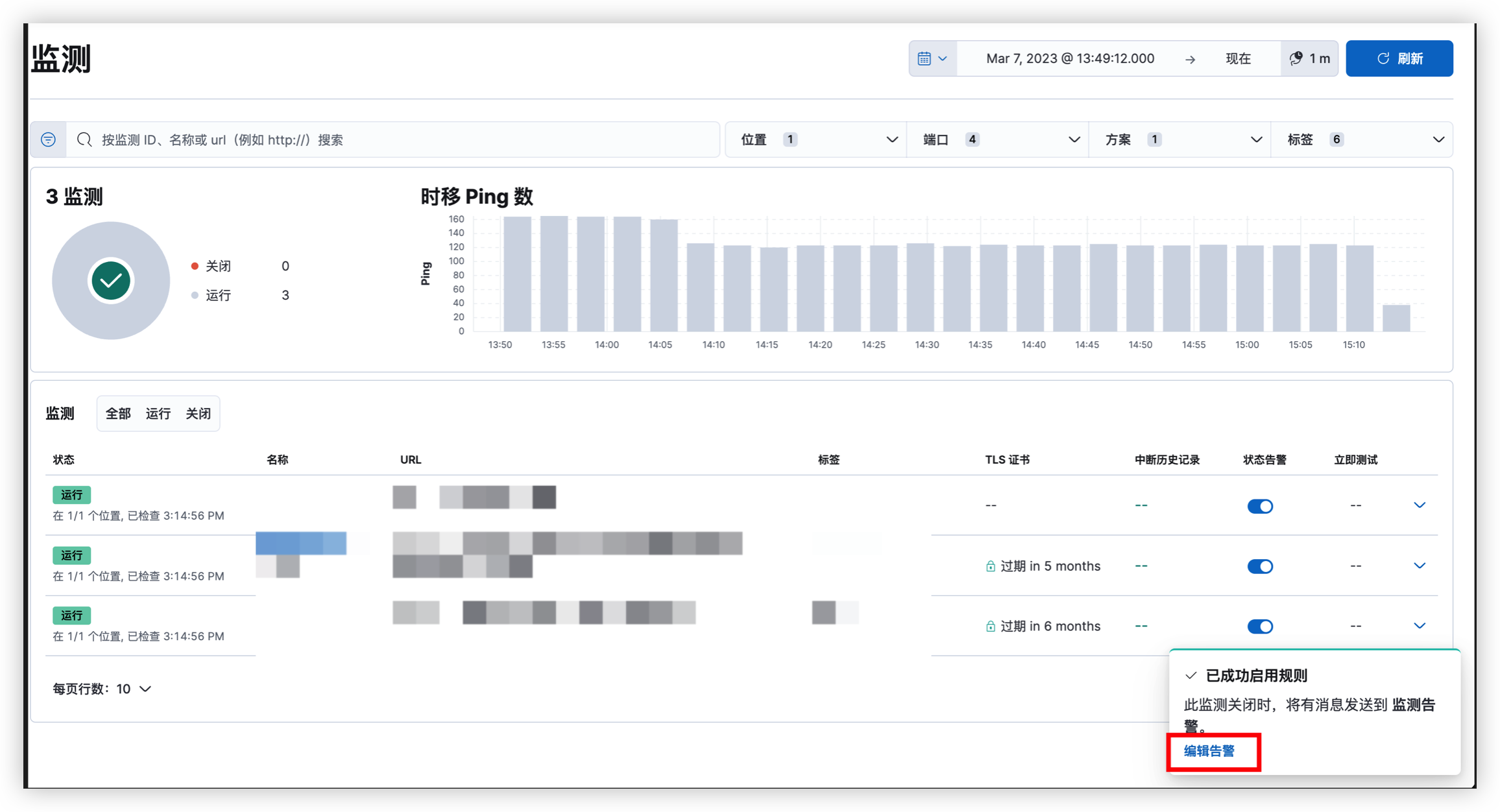

Enable alerts on the monitors you added

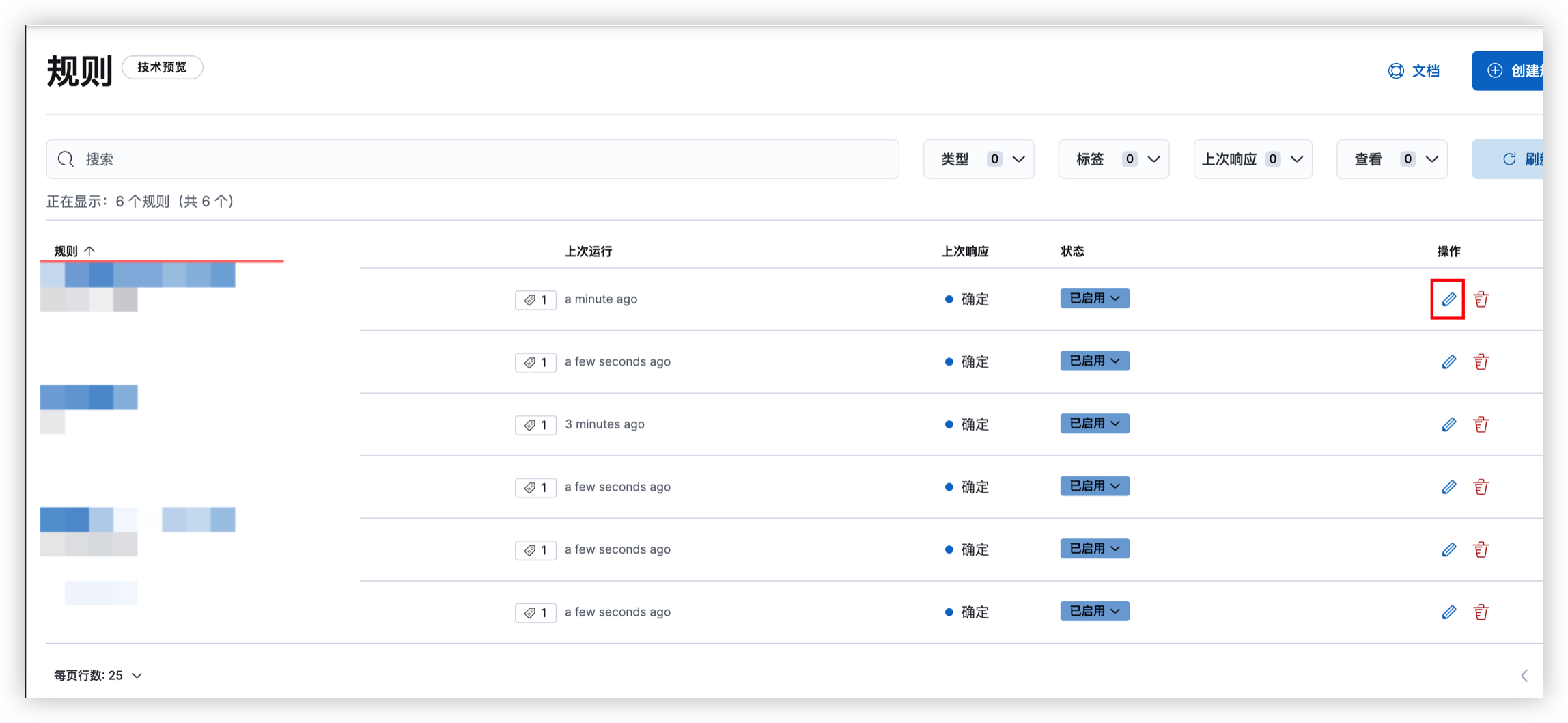

After enabling it, edit the alert

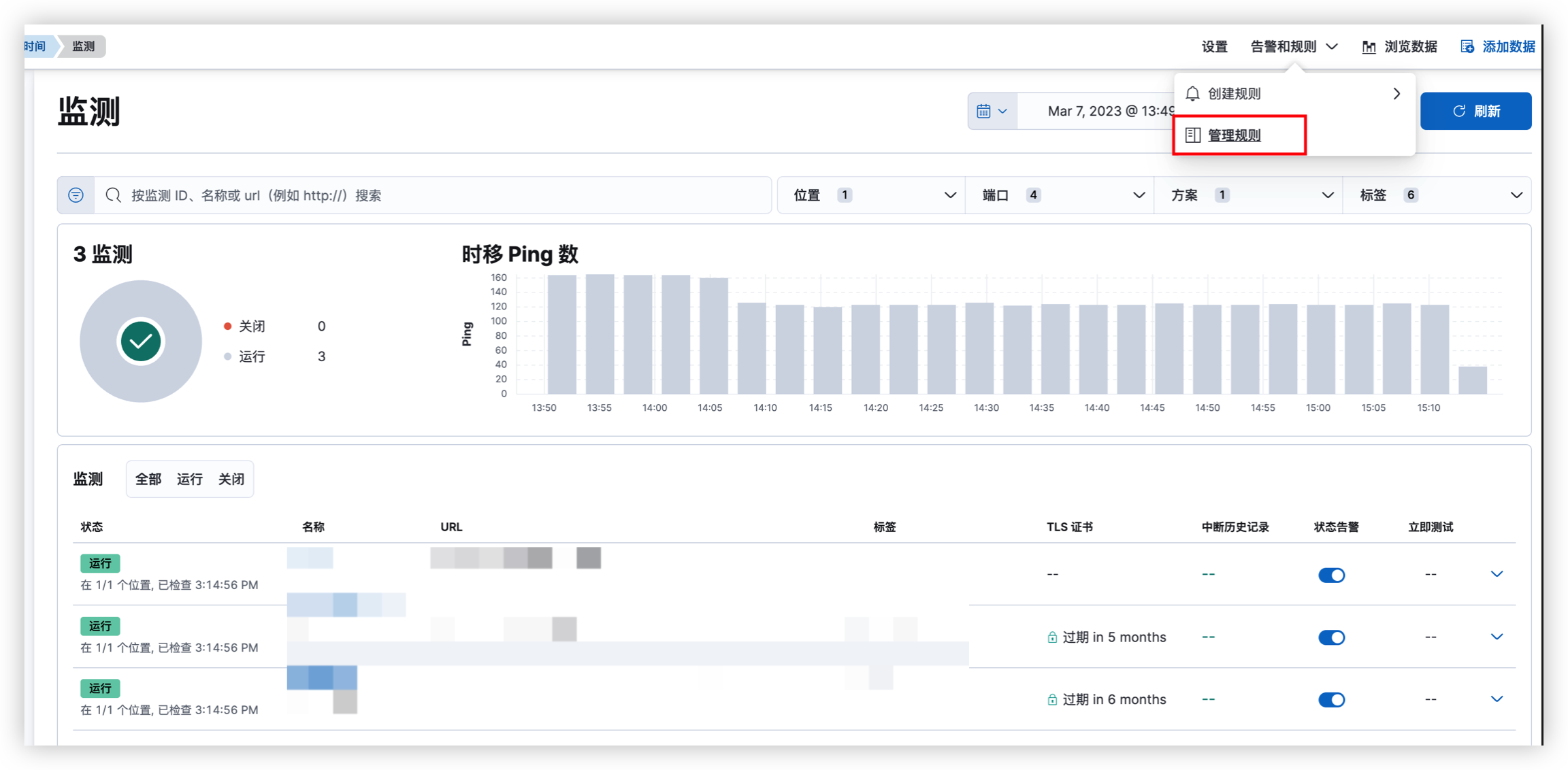

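If the pop-up has disappeared, you can also find it here.

Edit the alert rule

In the filter, delete the original condition; filtering by tag is recommended.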

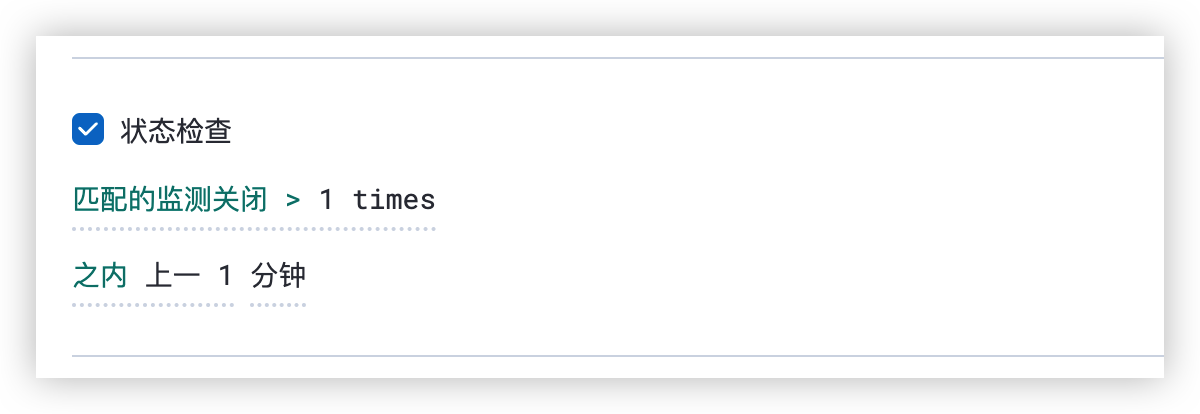

Adjust the status-check section as needed.

The alert body is up to you; this is the one I use:

```json
{
  "ruleName": "{{rule.name}}",
  "resource": "{{context.monitorUrl}}",
  "reason": "{{context.reason}}",
  "viewInAppUrl": "{{context.viewInAppUrl}}",
  "state": "{{alert.actionGroupName}}",
  "tags": "{{rule.tags}}",
  "phone": ["your phone number"]
}
```
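Before saving an action, it can help to preview what the body looks like with sample values. Kibana renders these `{{...}}` placeholders with Mustache, which does more than this (sections, escaping); the Python sketch below only substitutes dotted names from a dict, as a rough local preview. `render_preview` and the sample values in the usage are hypothetical:

```python
import re

# Matches {{name}} or {{ a.b.c }} placeholders
PLACEHOLDER = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def render_preview(template: str, context: dict) -> str:
    """Replace {{a.b}} placeholders by walking nested dicts; unknown names become ''."""
    def lookup(match: re.Match) -> str:
        value = context
        for part in match.group(1).split("."):
            value = value.get(part, "") if isinstance(value, dict) else ""
        return str(value)
    return PLACEHOLDER.sub(lookup, template)
```

For example, `render_preview('{"ruleName":"{{rule.name}}"}', {"rule": {"name": "uptime"}})` gives `{"ruleName":"uptime"}`.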

Once this is added, other services with the same tag are covered, so you don't need to add it again.

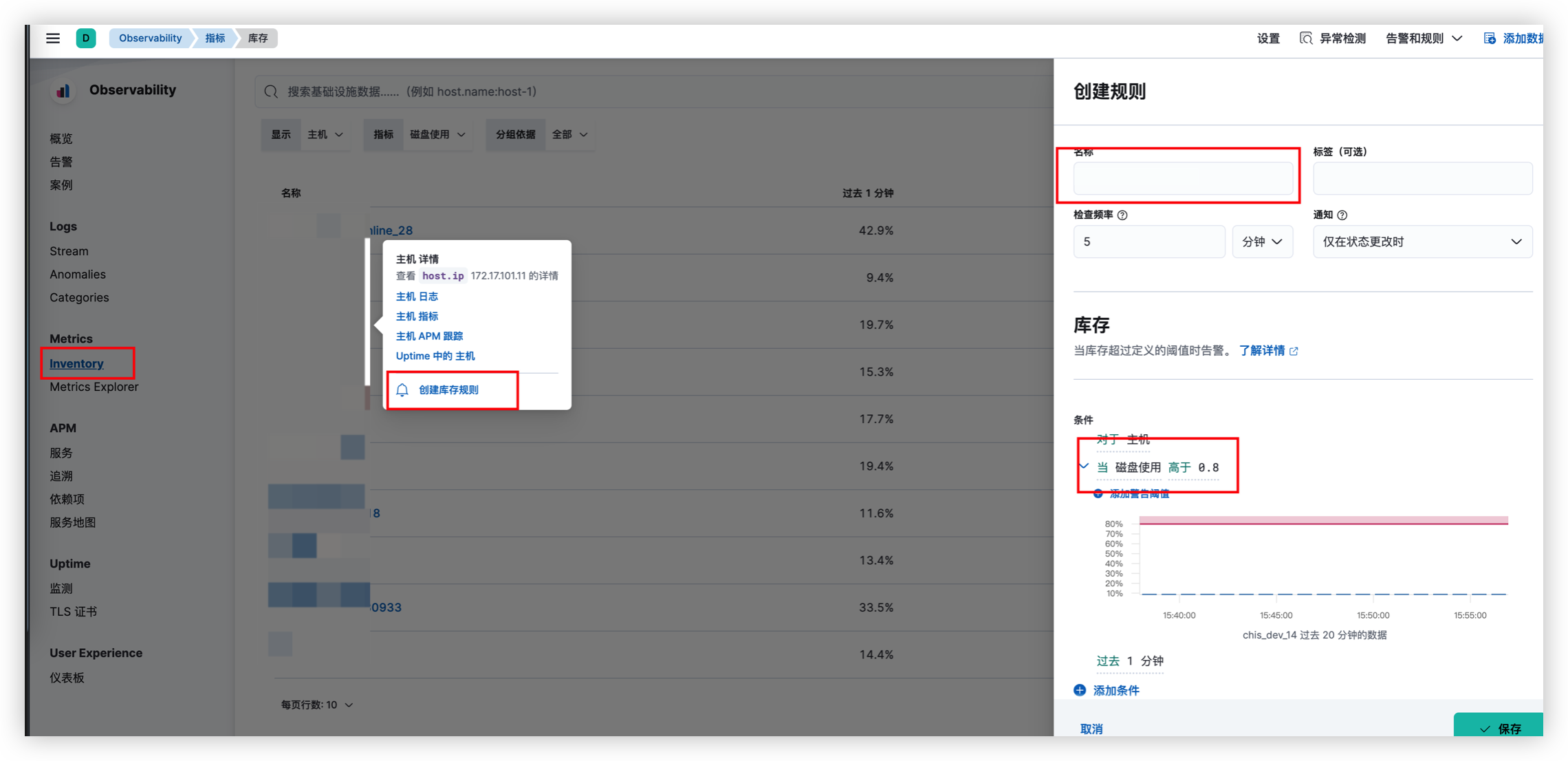

Disk alerts

Add a disk alert rule in Inventory.

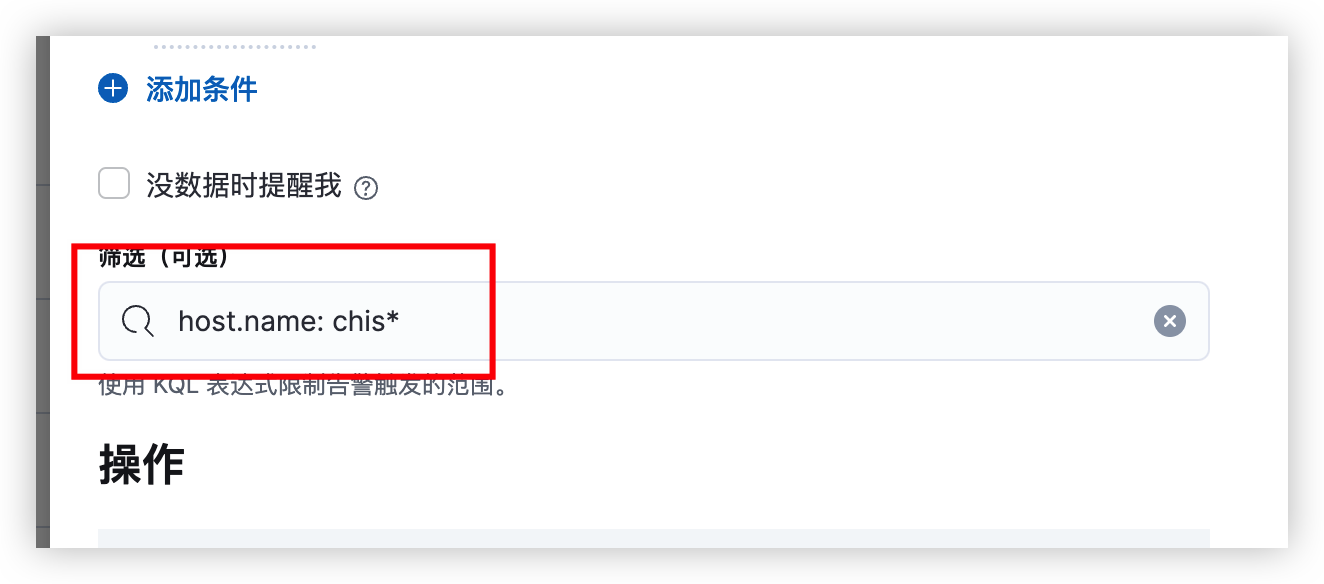

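You can add a filter; in the rule shown, the filter restricts the rule to hosts whose hostname starts with chis.

Add an action

Select the disk alert

Here, too, is the body I use:

```json
{
  "ruleName": "{{rule.name}}",
  "resource": "{{alert.id}}",
  "reason": "{{context.reason}}",
  "viewInAppUrl": "{{context.viewInAppUrl}}",
  "state": "{{alert.actionGroupName}}",
  "tags": "{{rule.tags}}",
  "phone": ["your phone number"]
}
```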

Install Logstash

On the free license, alerts can only be delivered by combining the index connector, Logstash, and a small service you write yourself.

Install

```shell
wget https://artifacts.elastic.co/downloads/logstash/logstash-8.3.3-amd64.deb
sudo dpkg -i logstash-8.3.3-amd64.deb
```

Edit the configuration

```shell
cd /etc/logstash/conf.d/
vim es-java.conf
```

```
input {
  elasticsearch {
    hosts => ["https://192.168.0.3:9200"]      # Elasticsearch host and port
    index => "kibana-alert-history-default"    # index to watch
    query => '{"query":{"range":{"@timestamp":{"gte":"now-1m","lte":"now/m"}}}}'   # documents from the last minute
    schedule => "* * * * *"                    # run the query every minute
    scroll => "5m"
    api_key => "your key"                      # format: id:api_key
    ssl => true
    ca_trusted_fingerprint => "your ca"
  }
}

output {
  http {
    url => "http://192.168.0.13:8080/monitor/alert"   # replace with your actual webhook URL
    http_method => "post"                             # send a POST request
    # `message` is only used when format is "message"; with format => "json"
    # the whole event is sent and `message` is ignored
    format => "message"
    content_type => "application/json"                # Content-Type of the request
    message => '{"message": "%{message}", "timestamp": "%{[@timestamp]}" }'   # custom request body
  }
}
```
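The service that receives the Logstash `http` output is yours to write (the configuration above posts to http://192.168.0.13:8080/monitor/alert). A minimal Python sketch of such a receiver using only the standard library. The in-memory `received` list and the forwarding hook (for example to WeCom) are hypothetical placeholders, and it assumes the request carries a Content-Length header:

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

received = []  # alerts kept in memory; a real service would forward them instead


class AlertHandler(BaseHTTPRequestHandler):
    """Accept POST /monitor/alert with a JSON body, as sent by the Logstash http output."""

    def do_POST(self):
        if self.path != "/monitor/alert":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            event = json.loads(self.rfile.read(length))
        except json.JSONDecodeError:
            self.send_error(400, "body is not JSON")
            return
        received.append(event)  # hypothetical hook: push to WeCom / SMS here
        self.send_response(200)
        self.end_headers()

    def log_message(self, fmt, *args):
        pass  # keep the console quiet


def serve(port: int = 8080) -> HTTPServer:
    """Start the receiver in a background thread and return the server."""
    server = HTTPServer(("0.0.0.0", port), AlertHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Anything other than a 2xx reply makes Logstash treat the request as failed, so the handler answers 200 only after it has taken the alert.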

Start

```shell
systemctl start logstash
```

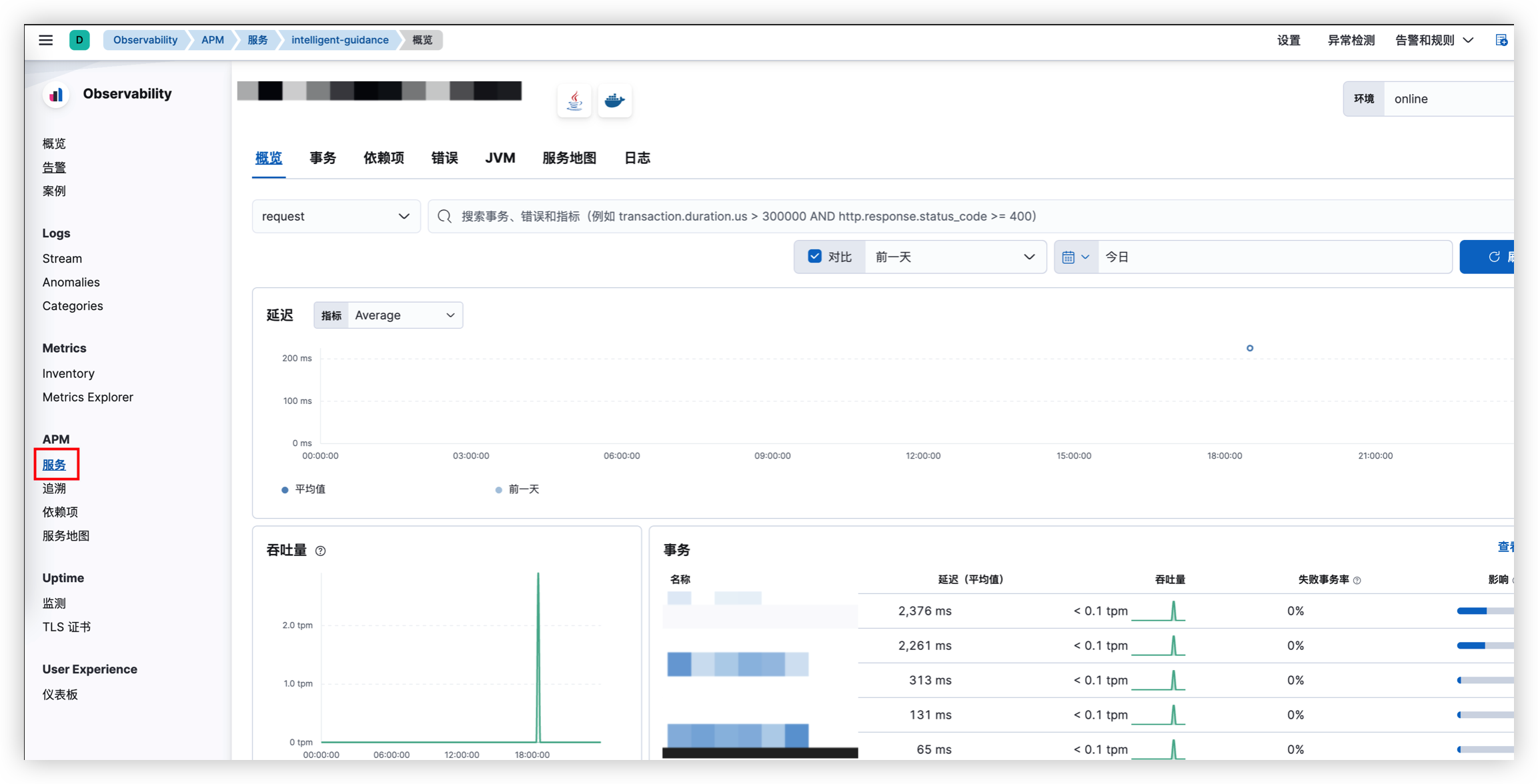

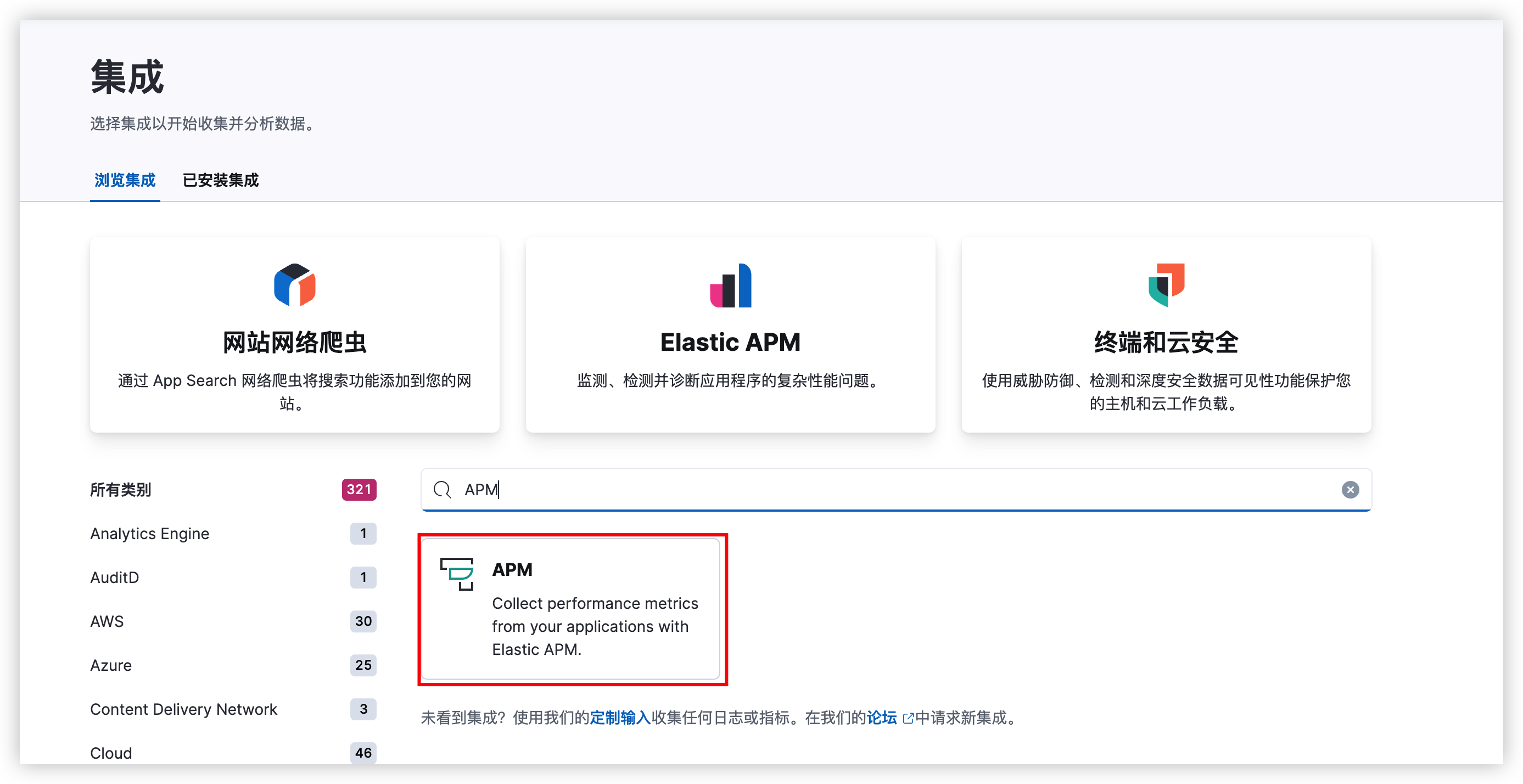

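APM monitoring

Install it from Integrations by following the guided steps.

Supported technologies

Before onboarding an application, first check that APM supports it: for example, does it run on Java 7 or later, and which web framework and database does it use?

For details, see the official "Supported technologies" page.

Onboarding APM

Note: this document covers only Spring Boot applications; for other languages, see the official documentation.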

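1. Add the dependency

The dependency is for log collection: application logs are unstructured, and this encoder writes them in a structured (ECS JSON) form that Filebeat can collect easily.

```xml
<dependency>
    <groupId>co.elastic.logging</groupId>
    <artifactId>logback-ecs-encoder</artifactId>
    <version>1.5.0</version>
</dependency>
```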

Edit logback-spring.xml in the application's resources directory.

Add the following to the existing logback-spring.xml:

```xml
<include resource="co/elastic/logging/logback/boot/ecs-file-appender.xml" />
<springProfile name="online">
    <root level="info">
        <appender-ref ref="file"/>
        <appender-ref ref="ECS_JSON_FILE"/>
    </root>
</springProfile>
```

That is, add one more appender reference to the root logger:

```xml
<appender-ref ref="ECS_JSON_FILE"/>
```

If you already define FILE_LOG_PATTERN, add %X to it as the placeholder for the trace ID (%X prints the logging MDC).

Reference configuration:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration scan="true" scanPeriod="60 seconds" debug="false">
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <springProperty name="applicationName" scope="context" source="spring.application.name" />
    <property name="LOG_FILE" value="logs/${applicationName}/log.out"/>
    <include resource="co/elastic/logging/logback/boot/ecs-file-appender.xml" />

    <!-- Log formats -->
    <property name="CONSOLE_LOG_PATTERN"
              value="%clr(%d{${LOG_DATEFORMAT_PATTERN:-yyyy-MM-dd HH:mm:ss.SSS}}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %clr(${PID:- }){magenta} %clr(---){faint} %clr([%15.15t]){faint} %clr(%c){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}"/>
    <property name="FILE_LOG_PATTERN"
              value="%d{${LOG_DATEFORMAT_PATTERN:-yyyy-MM-dd HH:mm:ss.SSS}} ${applicationName} %X ${LOG_LEVEL_PATTERN:-%5p} ${PID:- } --- [%t] %c : %.-1024m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}"/>

    <!-- Console output -->
    <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>${CONSOLE_LOG_PATTERN}</pattern>
        </encoder>
    </appender>

    <!-- File output -->
    <appender name="file" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_FILE}</file>
        <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy">
            <fileNamePattern>${LOG_FILE}.%d{yyyy-MM-dd}.%i.gz</fileNamePattern>
            <!-- Number of days of logs to keep -->
            <maxHistory>7</maxHistory>
            <!-- Maximum size of each log file -->
            <maxFileSize>10MB</maxFileSize>
        </rollingPolicy>
        <encoder>
            <pattern>${FILE_LOG_PATTERN}</pattern>
        </encoder>
    </appender>

    <!-- Per-environment log levels and appenders -->
    <springProfile name="local">
        <root level="info">
            <appender-ref ref="console"/>
        </root>
    </springProfile>

    <springProfile name="test">
        <root level="info">
            <appender-ref ref="console"/>
        </root>
    </springProfile>

    <springProfile name="dev">
        <root level="info">
            <appender-ref ref="file"/>
            <appender-ref ref="ECS_JSON_FILE"/>
        </root>
    </springProfile>

    <springProfile name="staging">
        <root level="info">
            <appender-ref ref="file"/>
            <appender-ref ref="ECS_JSON_FILE"/>
        </root>
    </springProfile>

    <springProfile name="online">
        <root level="info">
            <appender-ref ref="file"/>
            <appender-ref ref="ECS_JSON_FILE"/>
        </root>
    </springProfile>
</configuration>
```

In the configuration above, local, staging, and online are application environment (Spring profile) names; change them to match your setup.

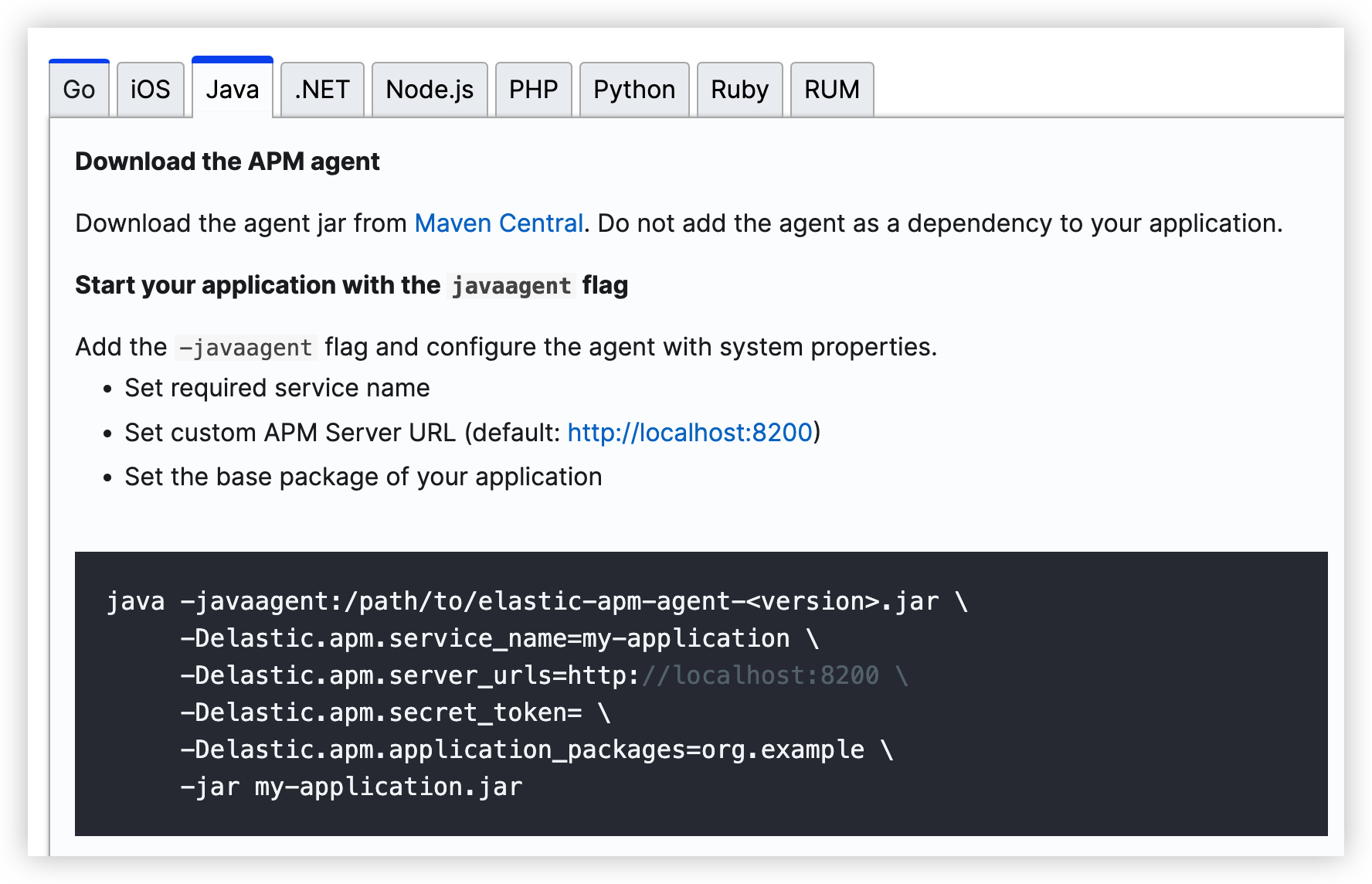

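2. Attach the agent

Download the agent on the server:

```shell
mkdir -p /data/apm/agent && cd /data/apm/agent
# Quote the URL: it contains '?', which the shell would otherwise treat as a glob character
wget "https://search.maven.org/remotecontent?filepath=co/elastic/apm/elastic-apm-agent/1.36.0/elastic-apm-agent-1.36.0.jar" -O elastic-apm-agent.jar
```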

Running with java -jar

Add the JVM arguments:

```shell
-javaagent:/path/to/elastic-apm-agent-1.36.0.jar -Delastic.apm.service_name=user-server -Delastic.apm.server_urls=http://12:8200 -Delastic.apm.secret_token=xxx -Delastic.apm.environment=production -Delastic.apm.application_packages=com.my.user
```

/path/to/elastic-apm-agent-1.36.0.jar: path to your agent jar

elastic.apm.server_urls: APM Server address

elastic.apm.service_name: your service name

elastic.apm.environment: your environment

elastic.apm.application_packages: your application's package

elastic.apm.secret_token: secret token
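The agent reads each option either as a `-Delastic.apm.<option>` system property or as an `ELASTIC_APM_<OPTION>` environment variable, which is why the container setup can pass the same settings through the environment. A small Python sketch that renders one option dict both ways, plus the full command line; the helper names and sample values are hypothetical. The agent and its properties must come before `-jar`, because anything after `-jar app.jar` is passed to the application, not the JVM:

```python
def apm_system_properties(options: dict) -> list:
    """Render each option as -Delastic.apm.<name>=<value>."""
    return [f"-Delastic.apm.{name}={value}" for name, value in options.items()]


def apm_env_vars(options: dict) -> dict:
    """Render the same options as ELASTIC_APM_<NAME> environment variables."""
    return {f"ELASTIC_APM_{name.upper()}": str(value) for name, value in options.items()}


def java_command(agent_jar: str, app_jar: str, options: dict) -> list:
    """Full java argv with the agent and its properties placed before -jar."""
    return ["java", f"-javaagent:{agent_jar}", *apm_system_properties(options), "-jar", app_jar]
```

Keeping one option dict and generating both forms avoids the JVM flags and the compose environment drifting apart.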

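Running in a container

Edit the Dockerfile and add a JAVA_AGENT variable.

Example:

```dockerfile
FROM openjdk:8-jdk-oracle
RUN mkdir /app
ENV SERVER_PORT=1113 \
    JAVA_AGENT=-javaagent:/app/agent/elastic-apm-agent.jar
COPY target/yourapp.jar /app/app.jar
# Run through sh -c so the ${...} variables are expanded at container start;
# unset JVM_* variables simply expand to nothing
ENTRYPOINT ["sh", "-c", "exec java -Djava.security.egd=file:/dev/./urandom ${JAVA_AGENT} ${JVM_XMS} ${JVM_XMX} ${JVM_XMN} ${JVM_OPTS} ${JVM_GC} -jar /app/app.jar"]
```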

Edit the docker-compose file and add the APM settings.

Example:

```yaml
version: '3.5'
services:
  user-server:
    restart: always
    image: user-server
    container_name: user-server
    environment:
      ELASTIC_APM_SERVICE_NAME: user-server
      ELASTIC_APM_APPLICATION_PACKAGES: com.my.user
      ELASTIC_APM_SERVER_URL: http://127.0.0.1:8200
      ELASTIC_APM_SECRET_TOKEN: xxx
      ELASTIC_APM_ENVIRONMENT: production
    ports:
      - "8080:8080"
    volumes:
      - /var/log/server:/logs
      - /data/apm/agent:/app/agent     # provides /app/agent/elastic-apm-agent.jar
    networks:
      - common

networks:
  common:
    external: true
```

The added settings are ELASTIC_APM_SERVICE_NAME, ELASTIC_APM_APPLICATION_PACKAGES, ELASTIC_APM_SERVER_URL, ELASTIC_APM_SECRET_TOKEN, and ELASTIC_APM_ENVIRONMENT. Inside a container, 127.0.0.1 refers to the container itself, so point ELASTIC_APM_SERVER_URL at an APM Server address the container can reach.

Collect logs

Go to the data disk:

```shell
cd /data
```

Download and unpack:

```shell
curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-8.3.3-linux-x86_64.tar.gz
tar xzvf filebeat-8.3.3-linux-x86_64.tar.gz
mv filebeat-8.3.3-linux-x86_64 filebeat
cd filebeat
```

Edit the config file:

```shell
mv filebeat.yml filebeat.yml.bak
vim filebeat.yml
```

```yaml
filebeat.inputs:
  - type: filestream
    id: beat-log
    enabled: true
    # Your log files
    paths:
      - /var/log/server/**/*.json
    parsers:
      - ndjson:
          overwrite_keys: true
          add_error_key: true
          expand_keys: true

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

setup.template.settings:
  index.number_of_shards: 1

setup.kibana:
  host: "http://127.0.0.1:5601"

output.elasticsearch:
  hosts: ["127.0.0.1:9200"]
  protocol: "https"
  api_key: "your api key"          # format: id:api_key
  ssl:
    enabled: true
    ca_trusted_fingerprint: "your ca"

processors:
  - add_host_metadata:
      when.not.contains.tags: forwarded
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
  - add_kubernetes_metadata: ~
```
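The ECS encoder writes flat, dotted keys such as `"log.level"`; with `expand_keys: true` the ndjson parser turns them into nested objects before indexing. A rough Python sketch of that transformation, for illustration only (Filebeat's own implementation reports conflicts differently); `expand_keys` here is a hypothetical function and the sample log line in the test is made up:

```python
def expand_keys(flat: dict) -> dict:
    """Turn dotted keys ({"log.level": "INFO"}) into nested objects ({"log": {"level": "INFO"}})."""
    nested = {}
    for key, value in flat.items():
        parts = key.split(".")
        node = nested
        for part in parts[:-1]:
            child = node.setdefault(part, {})
            if not isinstance(child, dict):
                # e.g. both "a" and "a.b" present: "a" cannot be a value and an object at once
                raise ValueError(f"key conflict at {key!r}")
            node = child
        node[parts[-1]] = value
    return nested
```

Nested fields are what Kibana expects for ECS names such as `log.level` and `service.name`.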

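Initialize (this takes a few minutes):

```shell
./filebeat setup -e
```

Start:

```shell
# Filebeat checks config-file ownership: the file must belong to root or to the user running Filebeat
sudo chown root filebeat.yml
nohup sudo ./filebeat -e &
```

Then view the collected logs in Kibana.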